As part of a new ASP.NET Core project that I've been blogging about recently, my team and I have reached the phase where we need to get the project onto some of our development servers, not just running on our local machines.

Problem is, I've been having a hell of a time getting our Azure DevOps system to correctly deploy this site. Once I'd worked out the myriad issues with our YAML deployment system, I was left to determine why our app couldn't talk to its dev database.

Turns out, I hadn't set the ASPNETCORE_ENVIRONMENT variable properly on our development servers, and to do so with our setup required a bit of black magic and a visit from an old friend.

Journey to the Real Problem

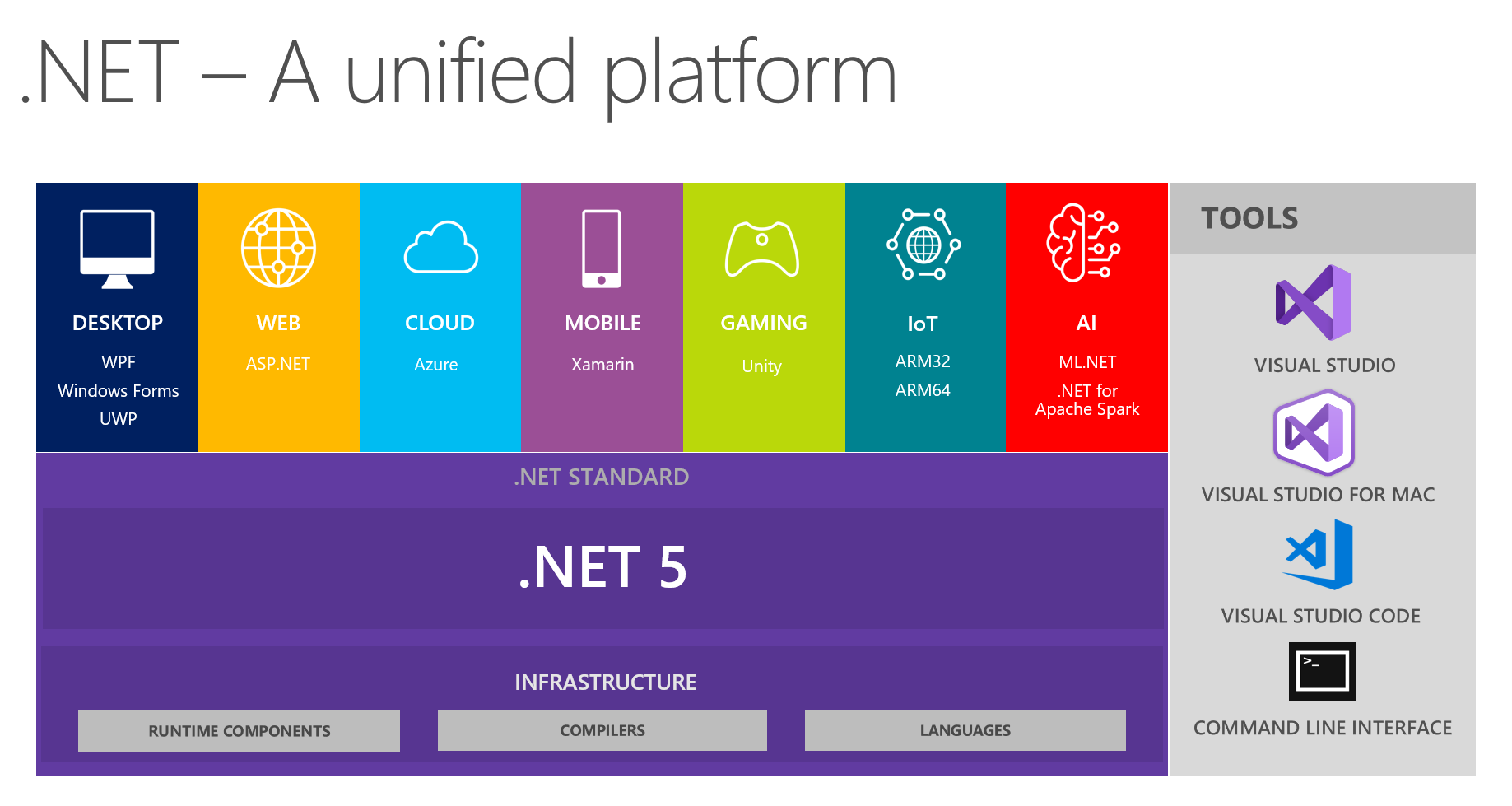

The app that we are trying to deploy is an ASP.NET Core 3.1 application, and the machines in our server cluster are all Windows servers running IIS.

I found out, rather suddenly, that our project was the trailblazer app for these servers; no one had tried to deploy an ASP.NET Core application onto them up to this point.

This was news to me, as I could have sworn someone else on my team had already run into these issues. It turned out later that I was both right and wrong at the same time.

No matter, I thought. This can't be that hard. Longtime readers will know that when I think that, things never seem to go well.

It was, in fact, hard. YAML kicked my butt, and to top it off, when I finally got the app deployed to the correct location, I received the following error:

Some pair-programming with my server team later, we found out that .NET Core had not actually been installed on these servers yet. So much for there being another app that had already done this. Server admin Raul fixed this issue for me, and we were in business.

Except, not really. Now we had a different issue: the app couldn't actually talk to its database.

Connecting is Hard

This particular app has two available databases: one in a dev environment that we use for things like local debugging and integration testing, and the official prod one.

It also has three environments: development, staging, and production. In development and staging, the app should talk to the dev database; in production, it should talk to the prod one.

The app sets up an IDbConnection with the appropriate connection string, and then adds that connection to the services layer so that it can be injected; all of this happens in the app's Startup.cs file. That code looks something like this:

    public void ConfigureServices(IServiceCollection services)
    {
        // Production gets the prod database; every other environment gets dev.
        if (Environment.IsProduction())
        {
            services.AddScoped<IDbConnection>(db =>
                new SqlConnection(Constants.ProdDatabaseConnectionString));
        }
        else
        {
            services.AddScoped<IDbConnection>(db =>
                new SqlConnection(Constants.DevDatabaseConnectionString));
        }
    }

The Constants values are ultimately sourced from an appsettings.json file.

The key issue is the first if statement, the one that checks to see if we are running in the production environment. See, ASP.NET Core checks the value of an environment variable called ASPNETCORE_ENVIRONMENT in the environment where it runs, and if it doesn't find that variable, it assumes it is running in production.

That's what was happening here. The app didn't find that variable, assumed it was in production mode, and tried to use the production connection string. But it was really running in a dev environment, which cannot even talk to the production database server.
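To make that chain of events concrete, here is a minimal sketch of the fallback and the resulting database choice. It is not the framework's actual code: EnvironmentSketch, ResolveEnvironmentName, and ChooseDatabase are names I made up for illustration, and treating a blank value the same as a missing one is an assumption of the sketch.

```csharp
using System;

public static class EnvironmentSketch
{
    // Hypothetical helper: the environment name the app ends up with.
    // A missing variable (or a blank one, an assumption of this sketch)
    // falls back to "Production".
    public static string ResolveEnvironmentName(string variableValue) =>
        string.IsNullOrWhiteSpace(variableValue) ? "Production" : variableValue;

    // Hypothetical helper: which database the check in Startup would pick.
    // Only "Production" (compared case-insensitively) gets the prod database.
    public static string ChooseDatabase(string environmentName) =>
        string.Equals(environmentName, "Production", StringComparison.OrdinalIgnoreCase)
            ? "prod"
            : "dev";
}
```

Feeding it Environment.GetEnvironmentVariable("ASPNETCORE_ENVIRONMENT") on a server where the variable was never set gives null, which resolves to "Production" and therefore to the prod database, exactly the situation described above.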

Now I had an issue. After about four hours of fruitless debugging and Googling, I finally went to my teammates and described the issue. At which point, one of them remarked:

"Oh, yeah, we've seen that before. You have to set it in a web.config file."

Which absolutely blew my mind. How did they know that if I was writing the trailblazer application? Turns out, they had already written one, but it was deployed to an entirely different server cluster.

What's Old Is New Again

But that wasn't really what bothered me. I was under the impression that one of the reasons ASP.NET Core was so damn cool was that we didn't need to use these antiquated XML files any more.

It turns out I was half right; if we didn't need to set the ASPNETCORE_ENVIRONMENT variable, we would not need to hand-write a web.config file at all, since publishing for IIS generates a basic one for us.

That said, my teammate showed me how they set up their application to do this. Their web.config file was pretty short:

    <configuration>
      <system.webServer>
        <handlers>
          <remove name="aspNetCore" />
          <add name="aspNetCore" path="*" verb="*" modules="AspNetCoreModuleV2" resourceType="Unspecified" />
        </handlers>
        <aspNetCore processPath="dotnet" arguments=".\AppName.dll" stdoutLogEnabled="false" stdoutLogFile=".\logs\stdout">
          <environmentVariables>
            <environmentVariable name="ASPNETCORE_ENVIRONMENT" value="Development" />
          </environmentVariables>
        </aspNetCore>
      </system.webServer>
    </configuration>

Once I had this file deployed to the server, the application just worked! It set ASPNETCORE_ENVIRONMENT to "Development" and the correct connection string was used in my app. It was like magic, albeit old and unsavory black magic.
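If you want to double-check that a deployed web.config really carries the variable, something like the following could do it. This is only a sketch: WebConfigCheck and ReadEnvironmentVariable are hypothetical names, it relies on XDocument from System.Xml.Linq, and it assumes the elements carry no XML namespace, as in the file above.

```csharp
using System;
using System.Linq;
using System.Xml.Linq;

public static class WebConfigCheck
{
    // Hypothetical helper: returns the value of the named <environmentVariable>
    // element in the given web.config XML, or null if no such element exists.
    public static string ReadEnvironmentVariable(string webConfigXml, string name)
    {
        var doc = XDocument.Parse(webConfigXml);
        return doc.Descendants("environmentVariable")
                  .Where(e => (string)e.Attribute("name") == name)
                  .Select(e => (string)e.Attribute("value"))
                  .FirstOrDefault();
    }
}
```

Calling WebConfigCheck.ReadEnvironmentVariable(File.ReadAllText("web.config"), "ASPNETCORE_ENVIRONMENT") from a small console app (with using System.IO) would then tell you what the site is being told to run as; a null result means the variable isn't set there at all.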

In all seriousness, this did feel like going backward. I really thought I wouldn't have to deal with web.config files anymore once we moved to ASP.NET Core. But hey, our app works in the dev environment now, and quite frankly that's all that matters.

Summary

In our environment (Windows servers, running IIS, hosting ASP.NET Core 3.1 apps), we needed a web.config file in the app to correctly set the ASPNETCORE_ENVIRONMENT variable so that the app would run in the preferred environment.

I doubt I'm the only person out there who has had this problem, and if you've needed to do something like this, please feel free to tell me about it in the comments!

Happy Coding!